Loss

Exploring the Landscape

Deep learning Explorers

MOVING LANDS

STILL LANDS

METHOD

Human GPT Quick trailer of the pioneering “Human GPT” performance that I recently designed and directed for the European AI Congress Watch

Movie Launch: Hamelin 77, our movie production that pioneered the use of Gen AI techniques in cinema launches in Amazon Prime Video (Europe/UK now and USA from September) Watch | Trailer

NEW paper: SplInterp: Improving our Understanding and Training of Sparse Autoencoders (Jeremy Budd, Javier Ideami, Benjamin Macdowall Rynne, Keith Duggar, Randall Balestriero) Access Paper

NEW AI talks: In Morocco (EO Org), Andorra (NextAI Summit), Barcelona, and other locations Watch Ideami in action

NEW Article: Growing an artificial mind from scratch: Scale free Active Inference Read full article

AI Symphonic Concert: AI Music + Videos by Javier Ideami. Music performed by ADDA Simfónica conducted by Josep Vicent. Watch Concert

Into the AI Matrix Viz: Exploring visually and with real data the attention matrices of an LLM. Watch

NEW course: LLM Mastery: Hands-on Code, Align and Master LLMs: 80% practical coding / 20% theory including a unique Origami + AI section

- Scale free Active Inference, towards a more sustainable and explainable AI

- Loss Landscapes and the Blessing of Dimensionality

- The Tower of Mind, towards a better ChatGPT

- Every prompt matters

- Towards a sustainable generative AI revolution

- X-Ray Transformer

- Towards the end of deep learning and the beginning of AGI

- RosettaMind Refresh your mind by challenging it. Your collective memory garden powered by science and AI Explore

- LL Explorer 1.1 is a free tool to explore loss landscapes of deep learning optimization processes.

- The Geniverse: One of the first generative AI platforms in the world. Active until mid-2023, it represented the first phase of what has now evolved into Krea.ai, a leading generative AI company based in Silicon Valley.

- Lucy 1.0 Lucy visualizes the parameters of neural networks in real time.

- Ida: The Human-AI alignment issue Trailer

- Hamelin 77: Award winning movie, one of the first dealing with prompt eng & gen AI Trailer

- 99 : The challenges of human-AI interaction Trailer

- Explorers of the Infinite, @ III European AI Forum (encuentrosnow.es)

- Creativity + AI, @ MEPA Unleashed, EO Bahrain

- Road to AGI/ASI, @ IIA (iia.es)

- The Tower of Mind, towards a better ChatGPT, @ Roams (roams.es) & IIA (iia.es)

- Encounters of the third kind with Generative AI, @ Asturias Power

- From Flatland to the Trillion dimensional space, @ Strive School

- Loss landscapes and the flatland perspective, @ Weights & Biases Deep Learning Salon

- AI Loss Landscape Visualization @ Synthetic Intelligence Forum, Toronto

- LLM Mastery: Hands-on Code, Align and Master LLMs, click link to visit

- Generative A.I., from GANs to CLIP, with Python and Pytorch, click link to visit

- Introduction to Generative AI, click link to visit

- Featured on cover of doctoral thesis – Machine Learning for Predicting Cancer Endpoints from Bulk Omics Data – by Sören Stahlschmidt : Open Access in DiVA

- Featured on workshops led by John Urbanic for the National Science Foundation in USA : Workshop details

- Featured on the cover of the new AIM AI Microsoft Research Project : https://www.microsoft.com/en-us/research/project/aim/

- Featured on Pytorch documentation : https://pytorch.org/blog/pytorch-1.6-now-includes-stochastic-weight-averaging/

- Mentioned @ MDPI: A Survey of Advances in Landscape Analysis for Optimisation (Dept of Decision Sciences, University of South Africa): https://mdpi.com/1999-4893/14/2/40/pdf

- A losslandscape.com project piece on the cover of the thesis by Martin Van Der Shelling (A data-driven heuristic decision strategy for data-scarce optimization, with an application towards bio-based composites).

- A losslandscape.com piece featured in book & paper by Simant Dube

- Ongoing collaborations with researchers from MIT, NYU, Landskape research group & other groups and institutions.

- Ideami A.I gallery, click link to visit

- Ideami @ Fine Art America, click link to visit

- Loss landscape NFT collection, click link to visit

- Neuroscience NFT collection, click link to visit

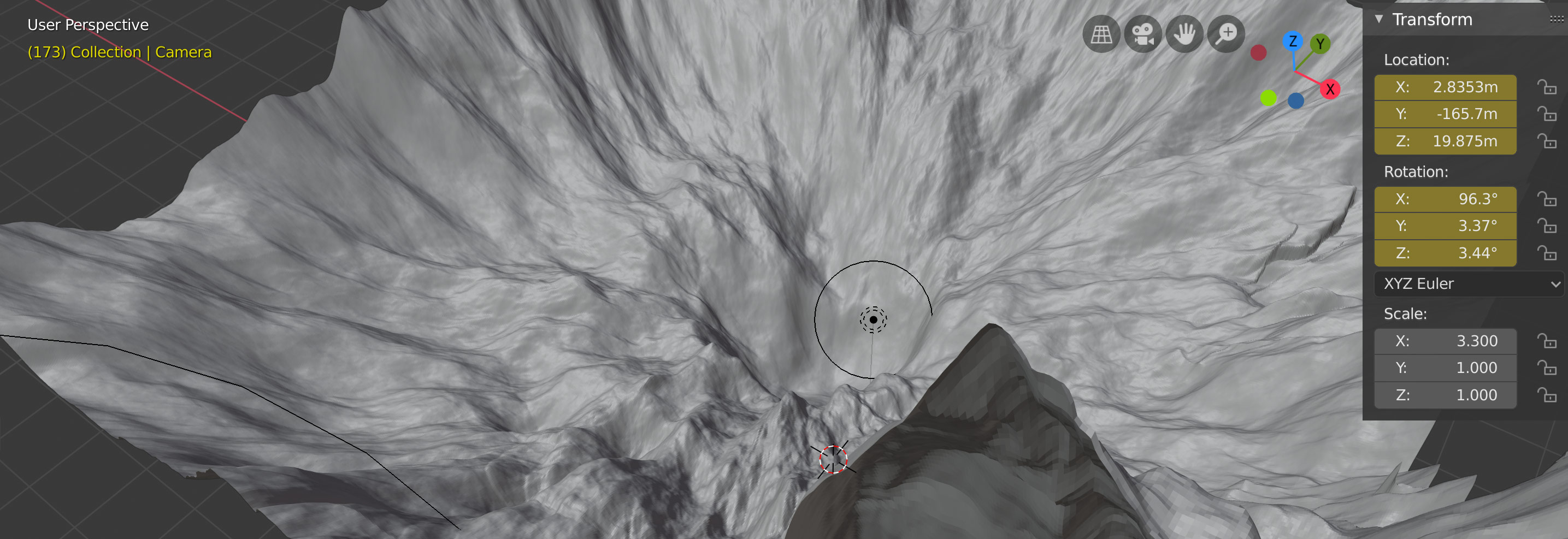

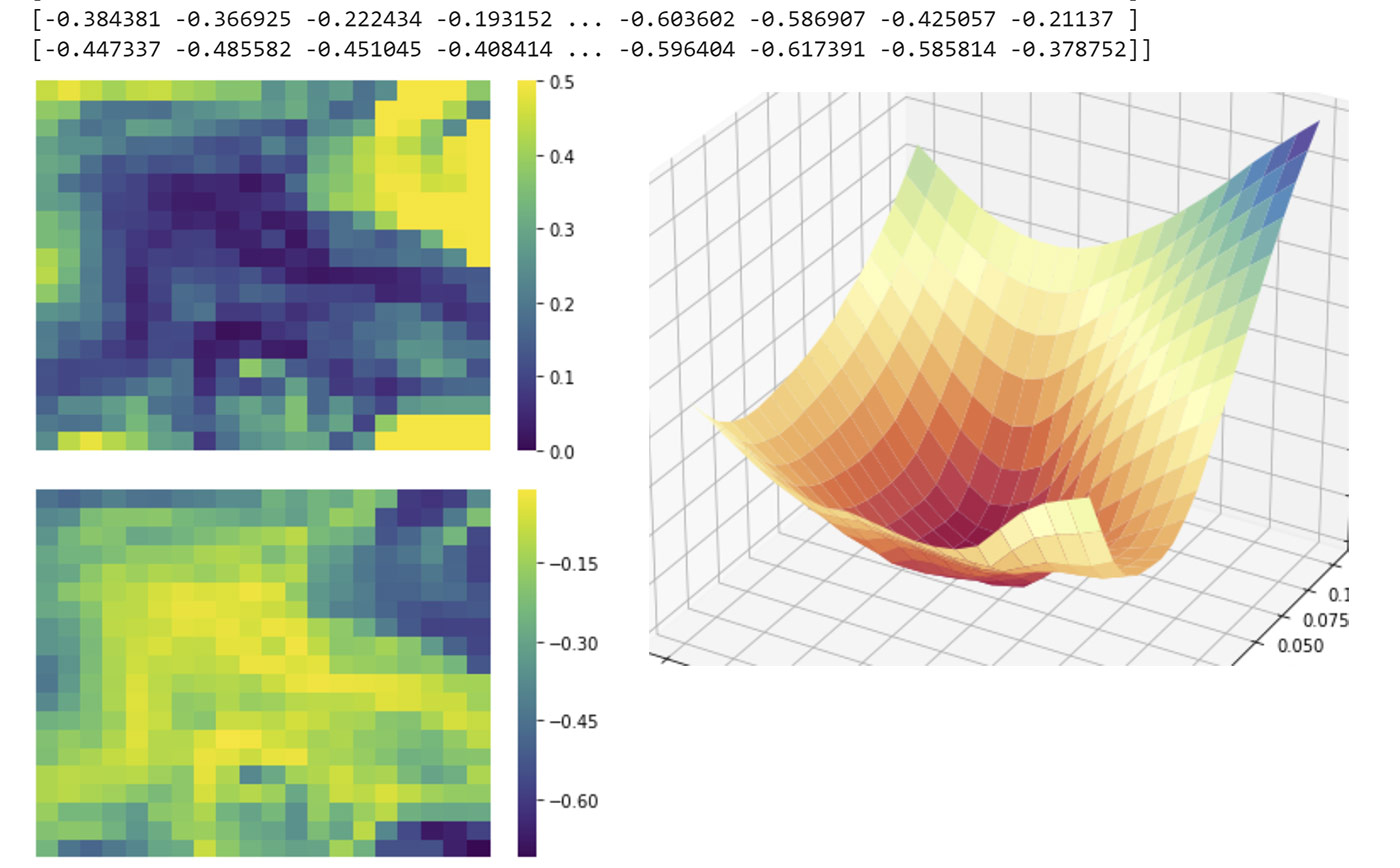

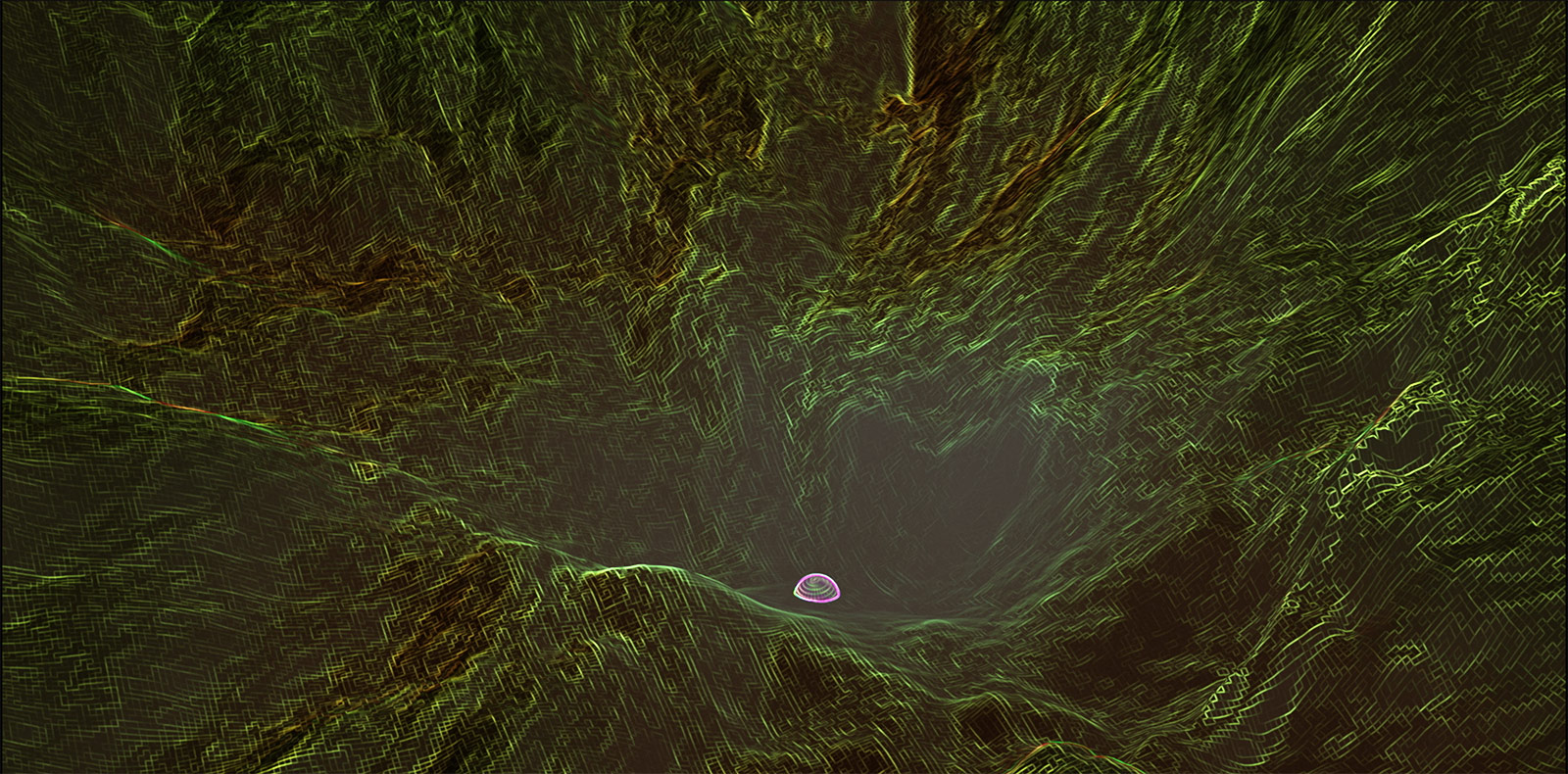

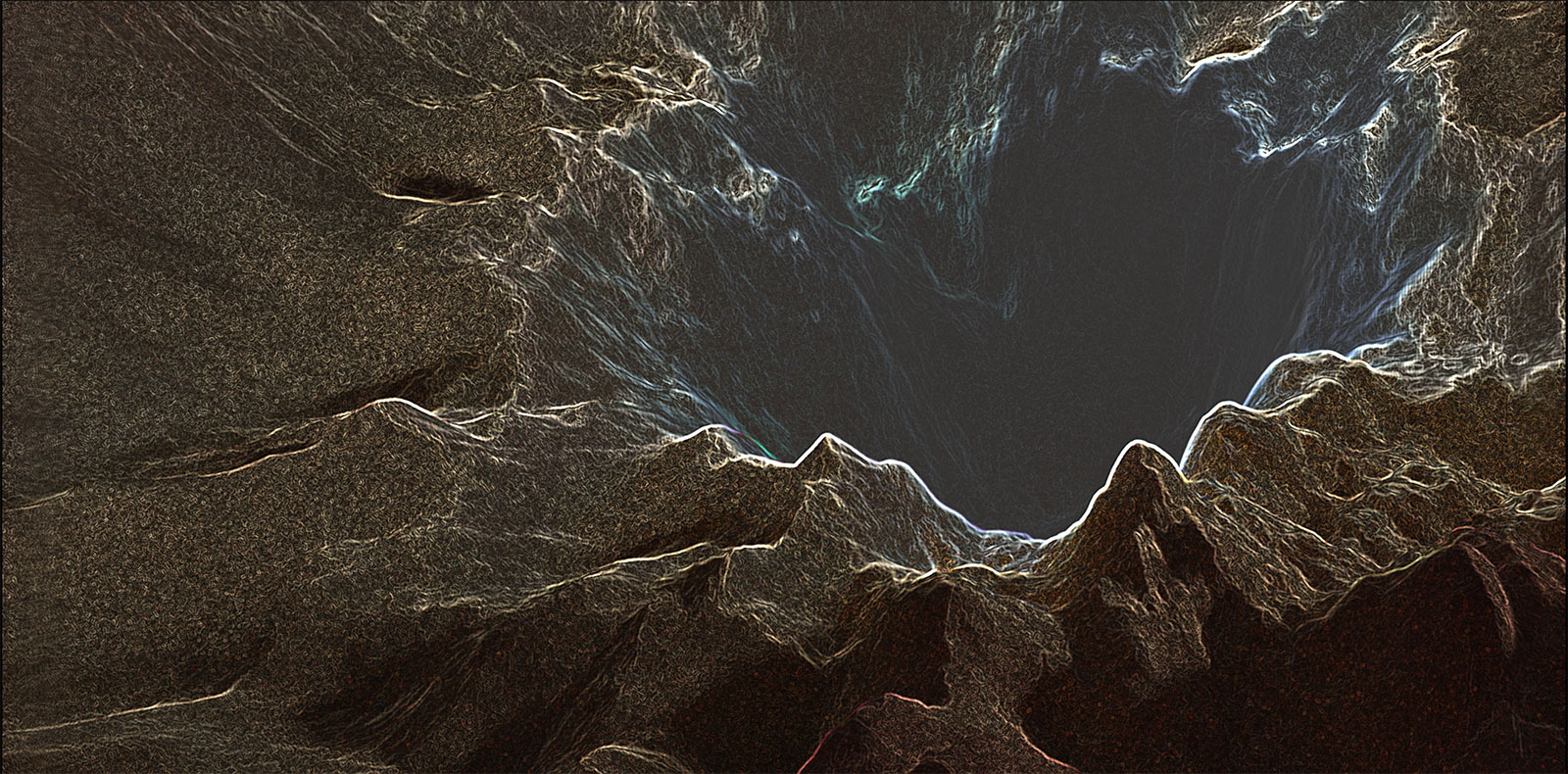

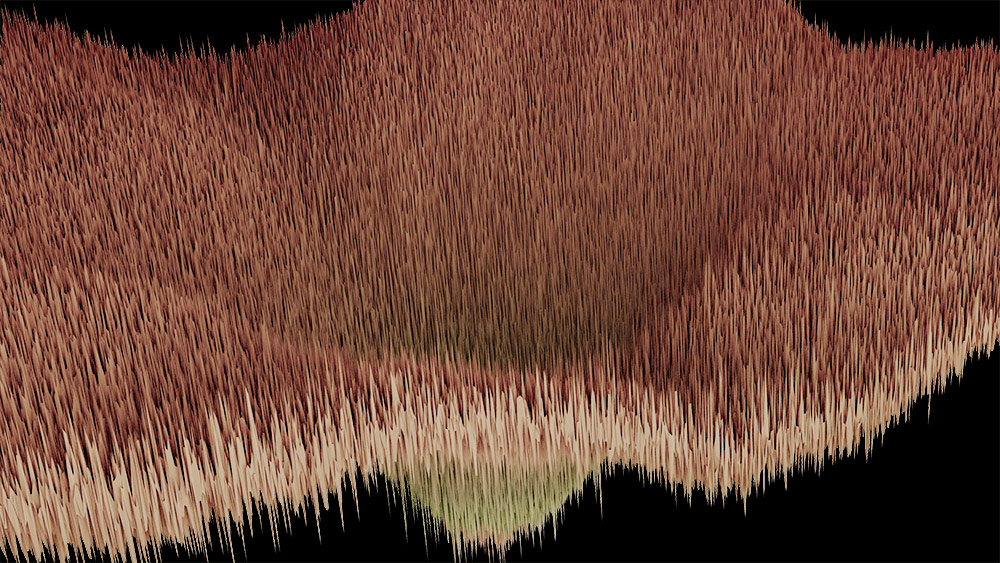

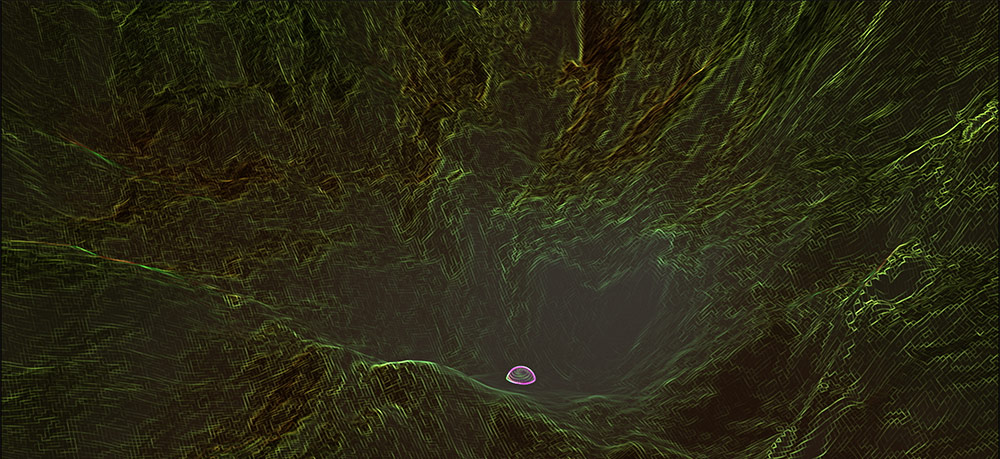

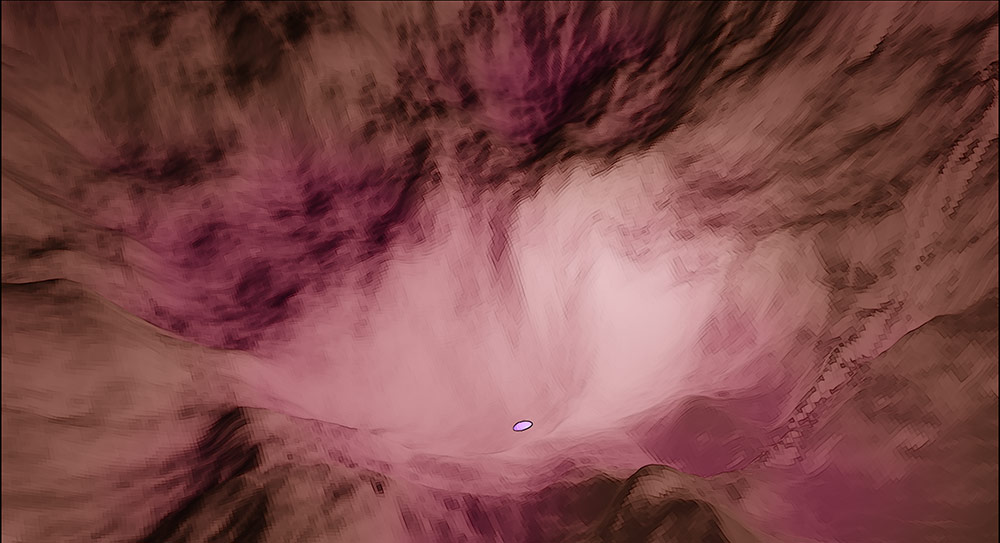

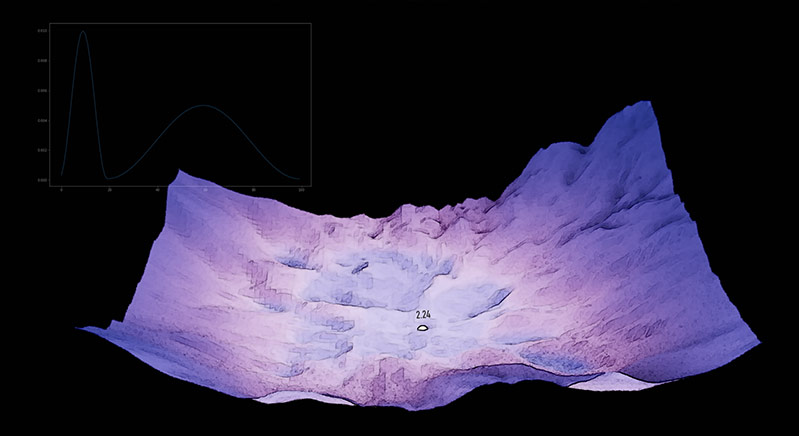

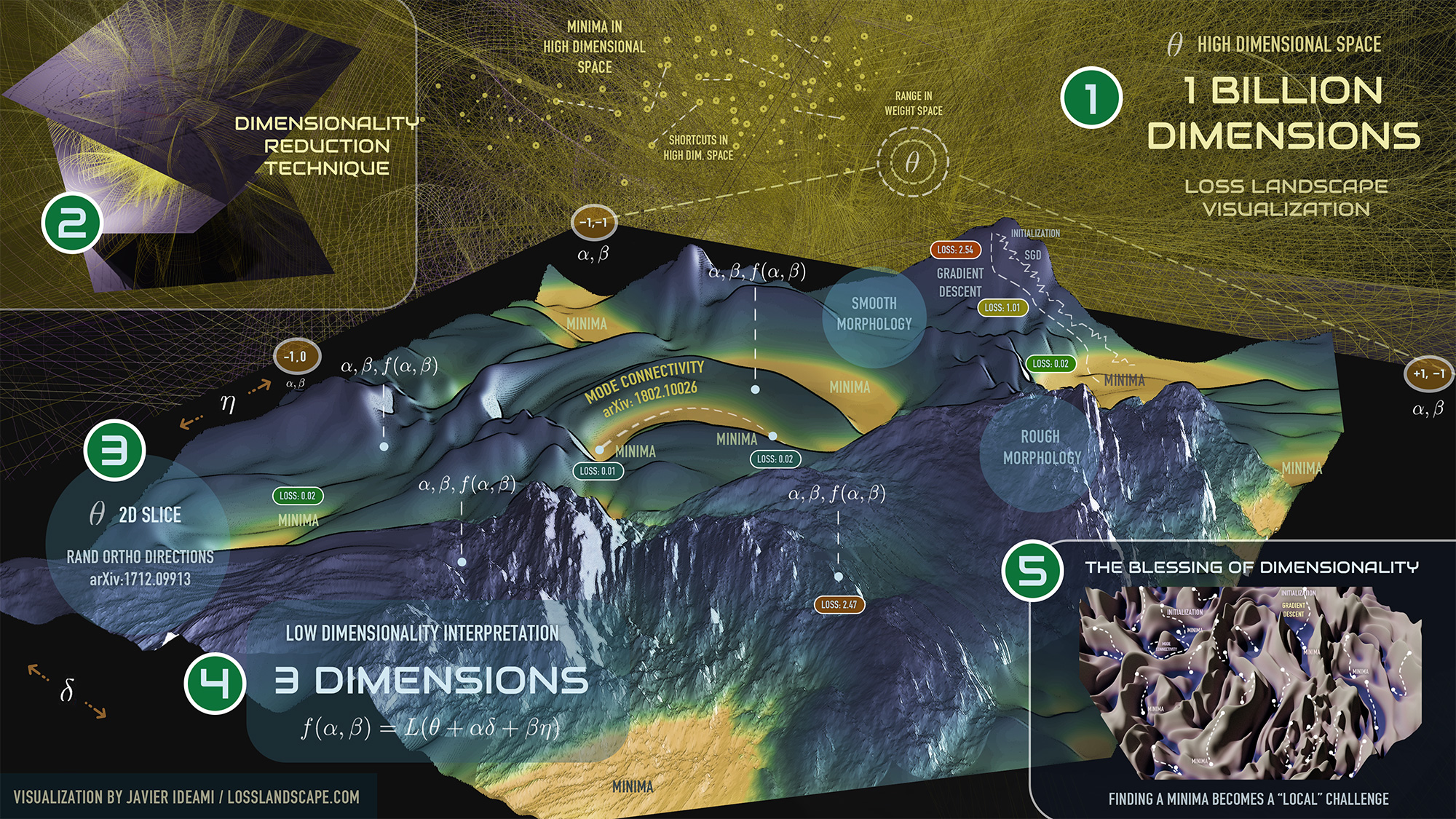

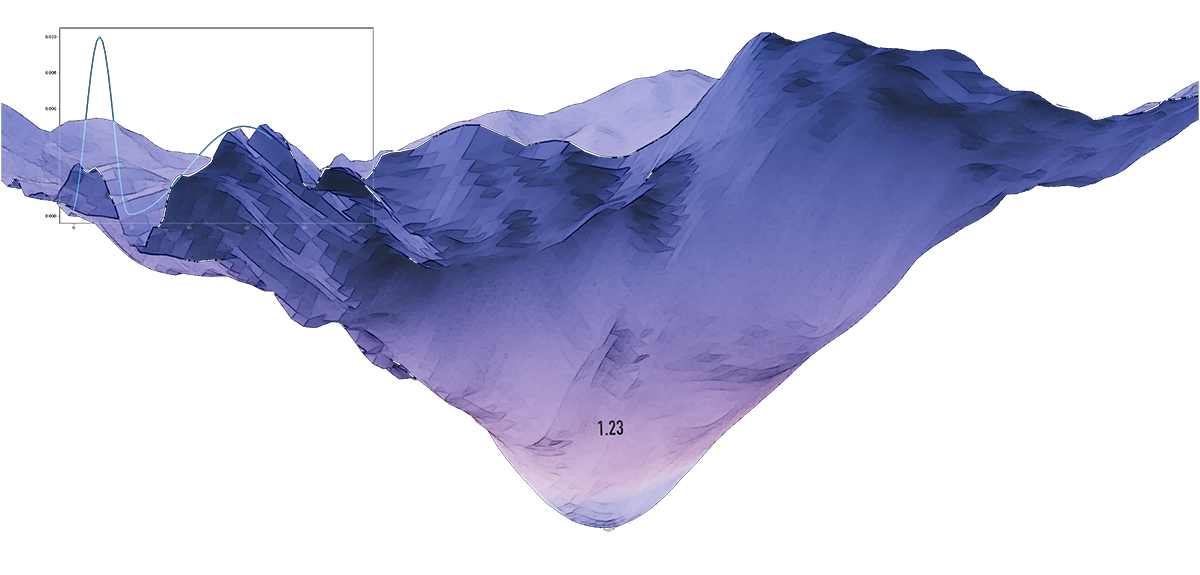

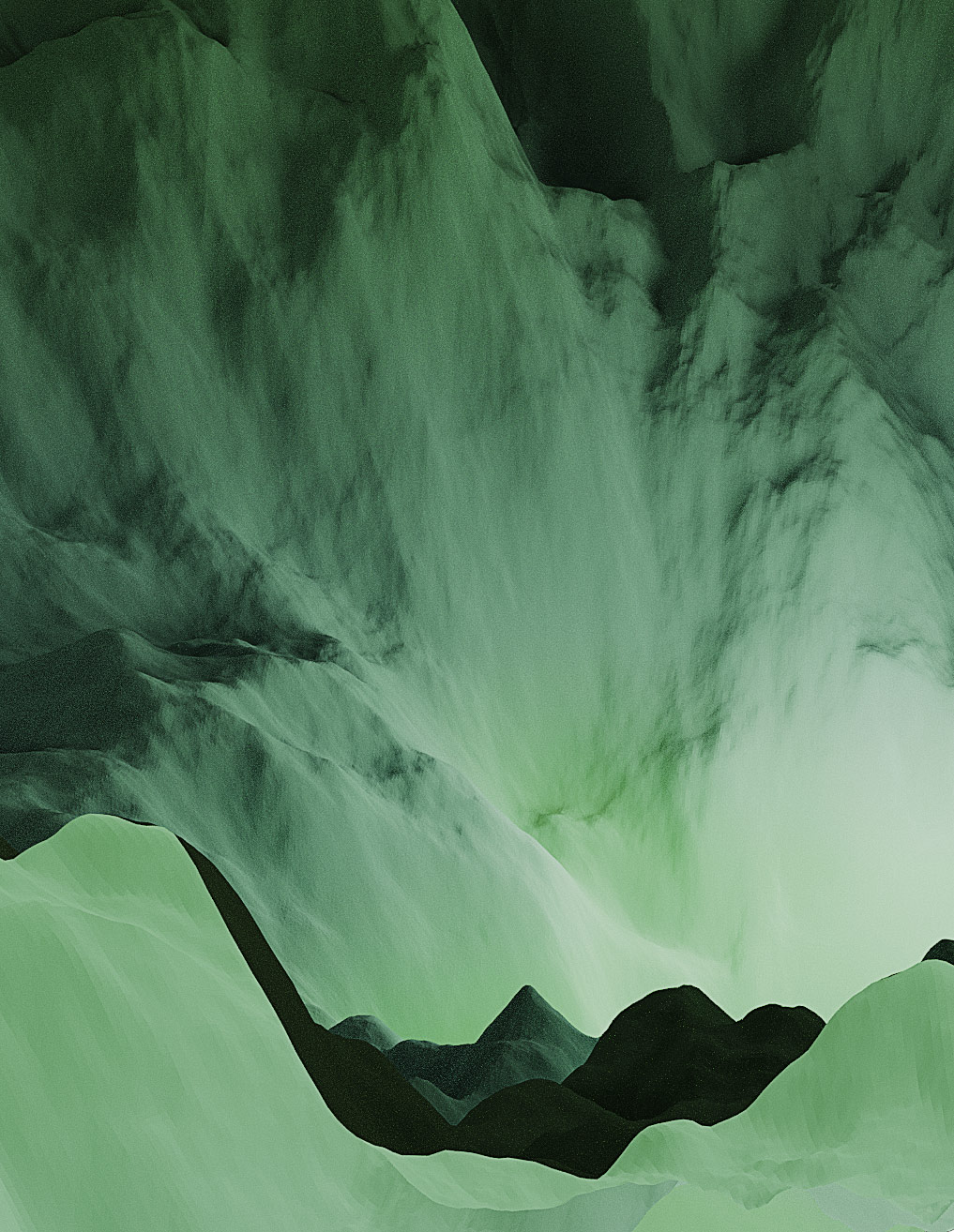

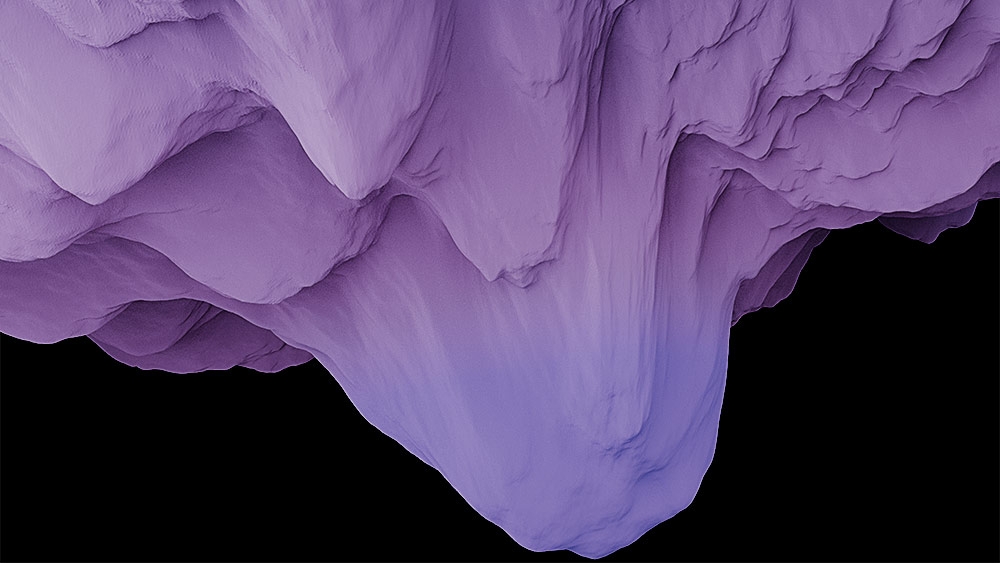

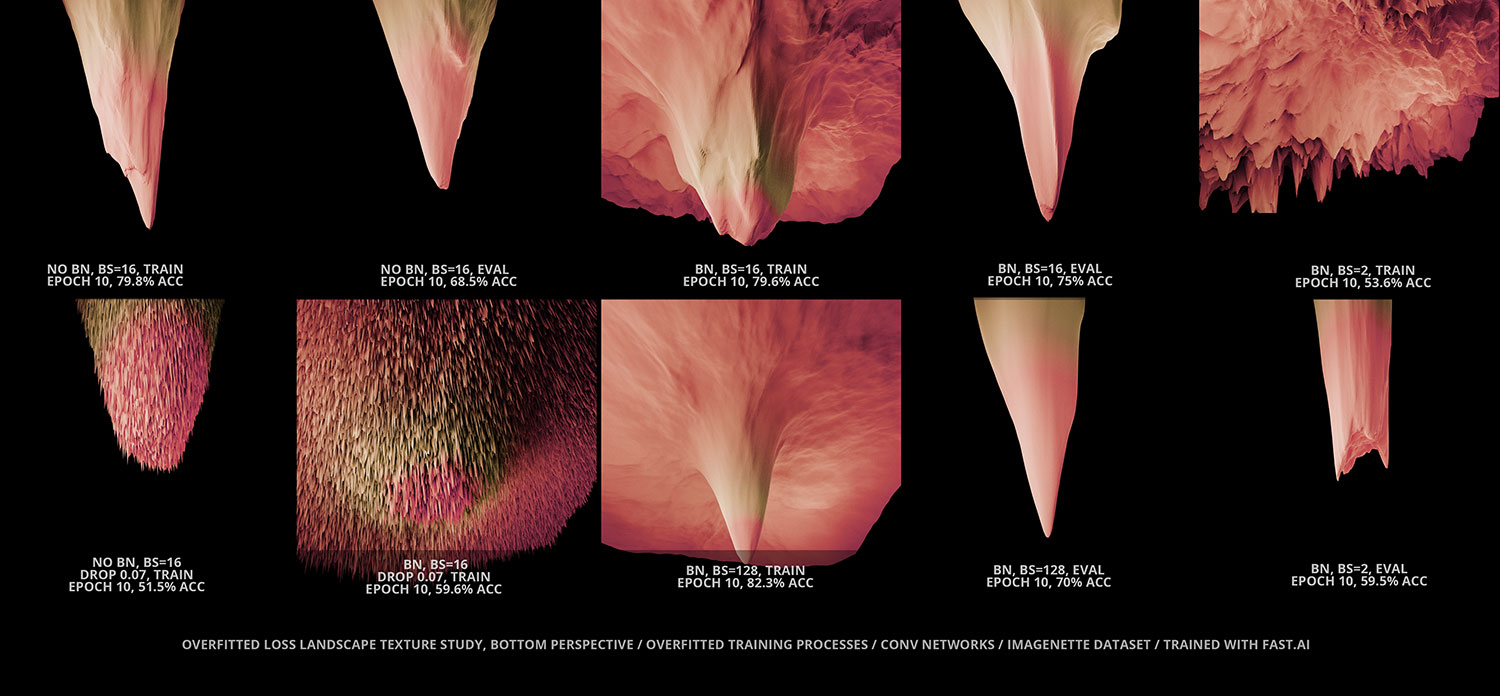

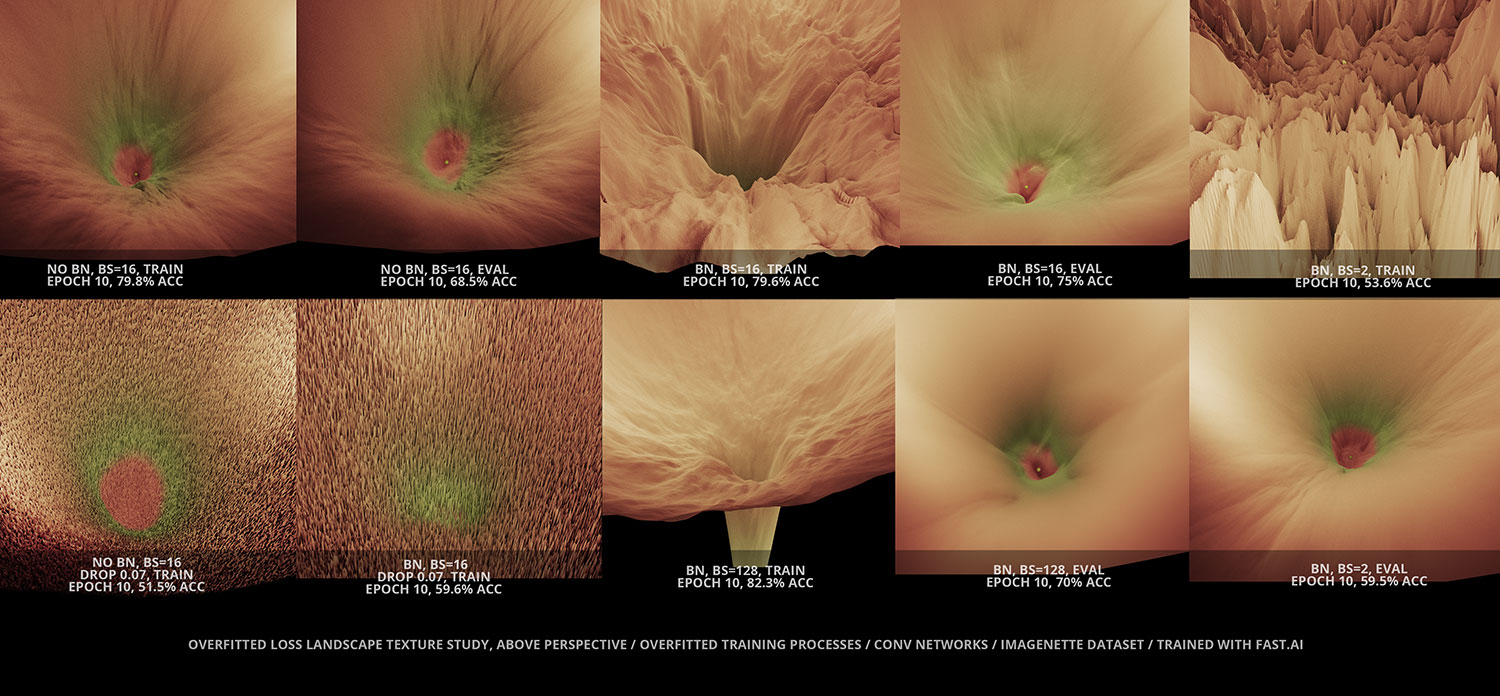

At the intersection of research and art, the A.I LL project explores the morphology and dynamics of the fingerprints left by deep learning optimization processes. The project goes deep into the training phase of these processes and, drawing on some of the latest deep learning and machine learning research, generates high quality visualizations and animations that can both inform and inspire the community. As the weight space changes through the optimization process, loss landscapes become alive, organic entities that challenge us to unlock the mysteries of learning. How do these multidimensional entities behave and change as we modify hyperparameters and other elements of our networks? How can we best tame these wild beasts as we cross their edge horizon on our way to the deepest convexity they hold?

LL is led by Javier Ideami, researcher, multidisciplinary creative director, engineer and entrepreneur. Contact Ideami at ideami@ideami.com

THE LATEST

Some of the latest news and visualizations of the LL project. For more, check the moving lands and still lands areas.

- Simulate descent trajectories down the gradients

- Live tweaking of descent rate, add stochasticity & other settings

- Draw trajectories that stick to the landscapes

- View gradient direction/magnitude

- Save snapshots with a variety of styles

- Access information about each surface

- Works from any device and no login is required

Access it at losslandscape.com/explorer
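The descent simulation listed among the features above can be sketched roughly as follows. This is only an illustration under stated assumptions: the surface is a hypothetical toy function, not one of the project's real landscapes, and the names `surface`, `gradient`, `simulate_descent`, `rate` and `noise` are invented for this example rather than taken from LL Explorer.

```python
import math
import random


def surface(x, y):
    """Hypothetical toy loss surface (not one of the project's landscapes)."""
    return 0.5 * (x ** 2 + 2.0 * y ** 2) + 0.3 * math.sin(3.0 * x)


def gradient(x, y, eps=1e-5):
    """Central-difference estimate of the surface gradient at (x, y)."""
    gx = (surface(x + eps, y) - surface(x - eps, y)) / (2 * eps)
    gy = (surface(x, y + eps) - surface(x, y - eps)) / (2 * eps)
    return gx, gy


def simulate_descent(start, rate=0.05, noise=0.0, steps=200, seed=0):
    """Follow the negative gradient from `start`.

    `rate` plays the role of the descent rate and `noise` scales Gaussian
    noise added to each gradient component (the stochasticity setting).
    Returns the list of visited (x, y, loss) points.
    """
    rng = random.Random(seed)
    x, y = start
    path = [(x, y, surface(x, y))]
    for _ in range(steps):
        gx, gy = gradient(x, y)
        x -= rate * (gx + noise * rng.gauss(0, 1))
        y -= rate * (gy + noise * rng.gauss(0, 1))
        path.append((x, y, surface(x, y)))
    return path


path = simulate_descent((2.0, 1.5))              # plain gradient descent
noisy = simulate_descent((2.0, 1.5), noise=0.5)  # same start, noisy gradients
print(f"start loss {path[0][2]:.3f}, end loss {path[-1][2]:.3f}")
```

Storing the loss with each visited point is what lets a drawn trajectory stick to the surface: every (x, y) is paired with the height of the landscape at that spot.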

HAIRMONY. Full video of the pioneering AI symphonic concert we co-created at the III European AI Forum in Spain, combining the humanity and talent of ADDA Simfònica and Josep Vicent, with the AI works I produced to generate the music and its synchronized videos.

Javier is the Co-Founder of Krea (formerly Geniverse). Geniverse was one of the first generative AI platforms in the world, featuring text-to-image and text-to-video capabilities using multiple architectures. It pioneered a unique flexible canvas interface that allowed users to interact with multiple generative AI models. Active until mid-2023, it marked the first phase of what has now evolved into Krea.ai, a leading generative AI company based in Silicon Valley.

Lucy 1.0 Lucy visualizes the parameters of neural networks in real time. As the neural network trains, its parameters are captured and streamed through a flask API towards the visualization system.

Journey to the center of the neuron A guided visualization exercise exploring biology + AI. Directed by Javier Ideami.

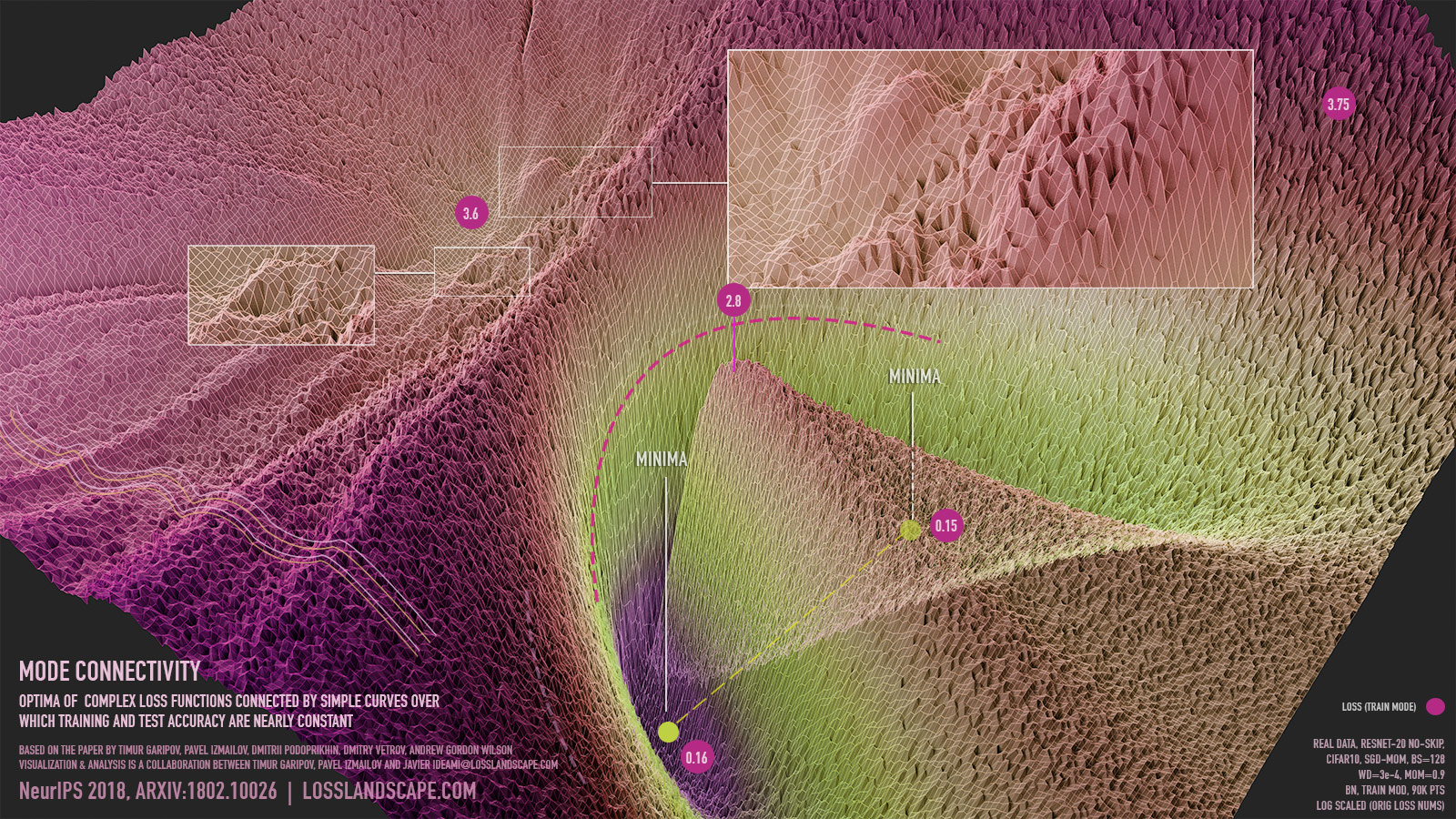

ICARUS Mode Connectivity. NeurIPS 2018 ARXIV/1802.10026. Optima of complex loss functions connected by simple curves over which training and test accuracy are nearly constant. Icarus uses real data and showcases the training process that connects two optima through a pathway generated with a Bézier curve. To create ICARUS, 15 GPUs were used over more than 2 weeks to produce over 50 million loss values. The entire process, end to end, took over 4 weeks of work.

As Wikipedia states, “In Greek mythology, Icarus is the son of the master craftsman Daedalus, the creator of the Labyrinth. Icarus and his father attempt to escape from Crete by means of wings that his father constructed from feathers and wax.” We can think of the loss landscape as another labyrinth where our “escape” is to find a low enough valley, one of those optima we are searching for. But this is no ordinary labyrinth, for ours is high-dimensional, and unlike in traditional labyrinths, in our loss landscape it is possible to find shortcuts that connect some of those optima. So just as Icarus and his father make use of special wings to escape Crete, the creators of the paper combine simple curves (a Bézier curve in this specific video) and their custom training process to escape the isolation between the optima, demonstrating that even though straight lines between the optima must cross hills of very high loss values, there are other pathways that connect them, along which training and test accuracy remain nearly constant. On top of that, the morphology of the two connected optima in this video also resembles a set of wings. These wings come to life in the strategies used by these modern “Icarus”-like scientists as they find new ways to escape the isolation of the optima present in these kinds of loss landscapes.
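The contrast between the straight line and the curved pathway can be illustrated with a small sketch. It uses the quadratic Bézier form from the paper, (1 − t)² w1 + 2t(1 − t) θ + t² w2, but everything else is an assumption made for illustration: `toy_loss` is a hypothetical 2D function whose minima lie on a circle, and the control point `theta` is placed by hand, whereas in the paper it is found by training.

```python
import math


def toy_loss(w):
    """Hypothetical 2-D loss whose minima lie on the unit circle."""
    return (math.hypot(w[0], w[1]) - 1.0) ** 2


def line_point(w1, w2, t):
    """Point on the straight segment between w1 and w2, t in [0, 1]."""
    return [(1 - t) * a + t * b for a, b in zip(w1, w2)]


def bezier_point(w1, theta, w2, t):
    """Quadratic Bezier curve with endpoints w1, w2 and control point theta."""
    return [(1 - t) ** 2 * a + 2 * t * (1 - t) * c + t ** 2 * b
            for a, c, b in zip(w1, theta, w2)]


w1, w2 = [-1.0, 0.0], [1.0, 0.0]   # two optima of the toy loss
theta = [0.0, 2.0]                 # control point, chosen by hand here

ts = [i / 100 for i in range(101)]
line_max = max(toy_loss(line_point(w1, w2, t)) for t in ts)
curve_max = max(toy_loss(bezier_point(w1, theta, w2, t)) for t in ts)

print(f"highest loss on the straight line: {line_max:.4f}")
print(f"highest loss on the Bezier path:   {curve_max:.4f}")
```

In this toy setting the straight line has to climb over the hill at the origin, while the curve bends around it and stays close to the ring of low loss.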

Visualization data generated through a collaboration between Pavel Izmailov (@Pavel_Izmailov), Timur Garipov (@tim_garipov) and Javier Ideami (@ideami). Based on the NeurIPS 2018 paper by Timur Garipov, Pavel Izmailov, Dmitrii Podoprikhin, Dmitry Vetrov, Andrew Gordon Wilson: https://arxiv.org/abs/1802.10026 | Creative visualization and artwork produced by Javier Ideami.

DROP visualizes changes produced in the loss landscape as the dropout hyperparameter is gradually increased. Loss Landscape generated with real data: Convnet, imagenette dataset, sgd-adam, bs=16, bn, lr sched, train mod, 250k pts, 20p-interp, log scaled (orig loss nums) & vis-adapted. When analyzing the loss landscape generated while increasing dropout, we see a noise layer gradually taking over the landscape, a layer that is disruptive enough to help in preventing overfitting and the memorization of paths and routes across the landscape, and yet not disruptive enough to prevent convergence to a good minimum (unless dropout is taken to extreme values). More variations of this visualization as well as images and videos of other visualizations are available at the Moving Lands and Still Lands galleries.

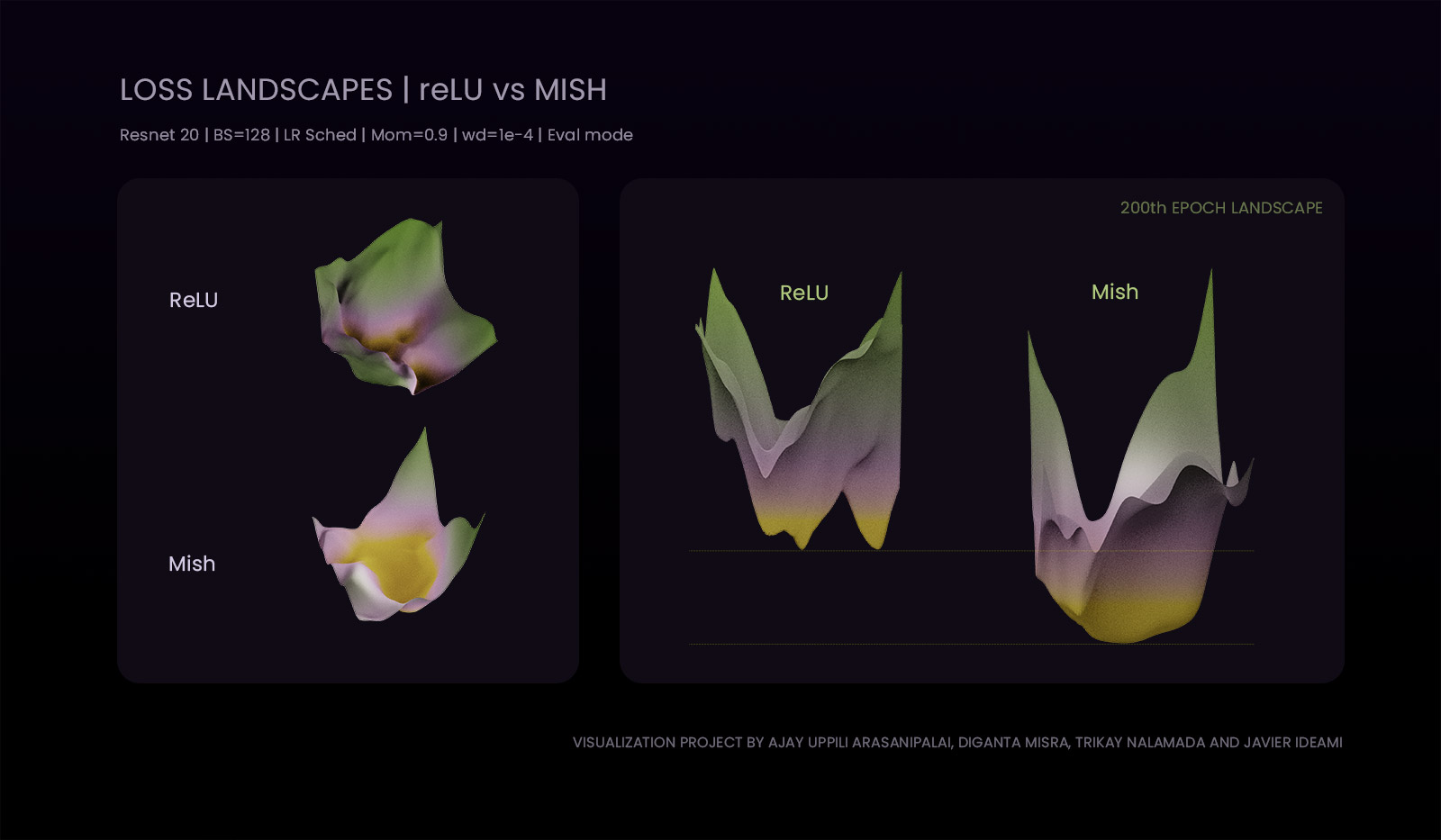

CROWN | Comparison study between the loss landscapes of the ReLU, Mish and Swish activation functions during the 200th epoch of the training of a Resnet 20 network. Resnet 20 | BS=128 | LR Sched | Mom=0.9 | wd=1e-4 | Eval mode. A collaboration by Diganta Misra, Ajay Uppili Arasanipalai, Trikay Nalamada and Javier Ideami, as part of the Landskape deep learning research group projects. More variations of this visualization as well as images and videos of other visualizations are available at the Moving Lands and Still Lands galleries.

“Sounds of a million souls” is a 2-minute artistic tribute to our cortical columns and the billions of neurons in the neocortex (8K quality). Set the YouTube settings to 4K or 8K resolution + full screen for the best experience. “And as we approach the magnificent column, the mysterious pattern calling us from afar with the sounds of a million souls… I sense that the brightest sun is compressed in those tiny specks of wonder.. reduced to a tapestry of dreams that resonate in our consciousness.. And I hear you laugh.. I hear you fall… I hear your tears devastate the horizons.. until we merge at the center of the column where silence awaits.. Silence, and then the million suns spiking towards the awakening of a new existence.. Hold me tight.. and let’s dive right in, right into the center of the column.. where you and I are one in silence..” – by Javier Ideami.

Read the related article “Journey to the center of the neuron” on https://towardsdatascience.com/journey-to-the-center-of-the-neuron-c614bfee3f9

X-Ray Transformer Infographic. Dive into transformers training & inference computations through a single visual. Download the larger 10488 x 14000 pixels version at the github repo on: https://github.com/javismiles/X-Ray-Transformer. Even larger versions will be launched soon. The X-Ray Transformer infographic allows you to make the journey from the beginning to the end of the transformer’s computations in both the training and inference phases. Its objective is to achieve a quick and deep understanding of the inner computations of a transformer model through the analysis and exploration of a single visual asset.

DROP visualizes changes produced in the loss landscape as the dropout hyperparameter is gradually increased. See extended description below the related video on this same page. More variations of this visualization as well as images and videos of other visualizations are available at the Moving Lands and Still Lands galleries.

ReLU-Mish-Swish. Loss Landscape Morphology Studies. Project by Ajay Uppili Arasanipalai, Diganta Misra, Trikay Nalamada and Javier Ideami within the Landskape deep learning research group projects. More variations available at the gallery.

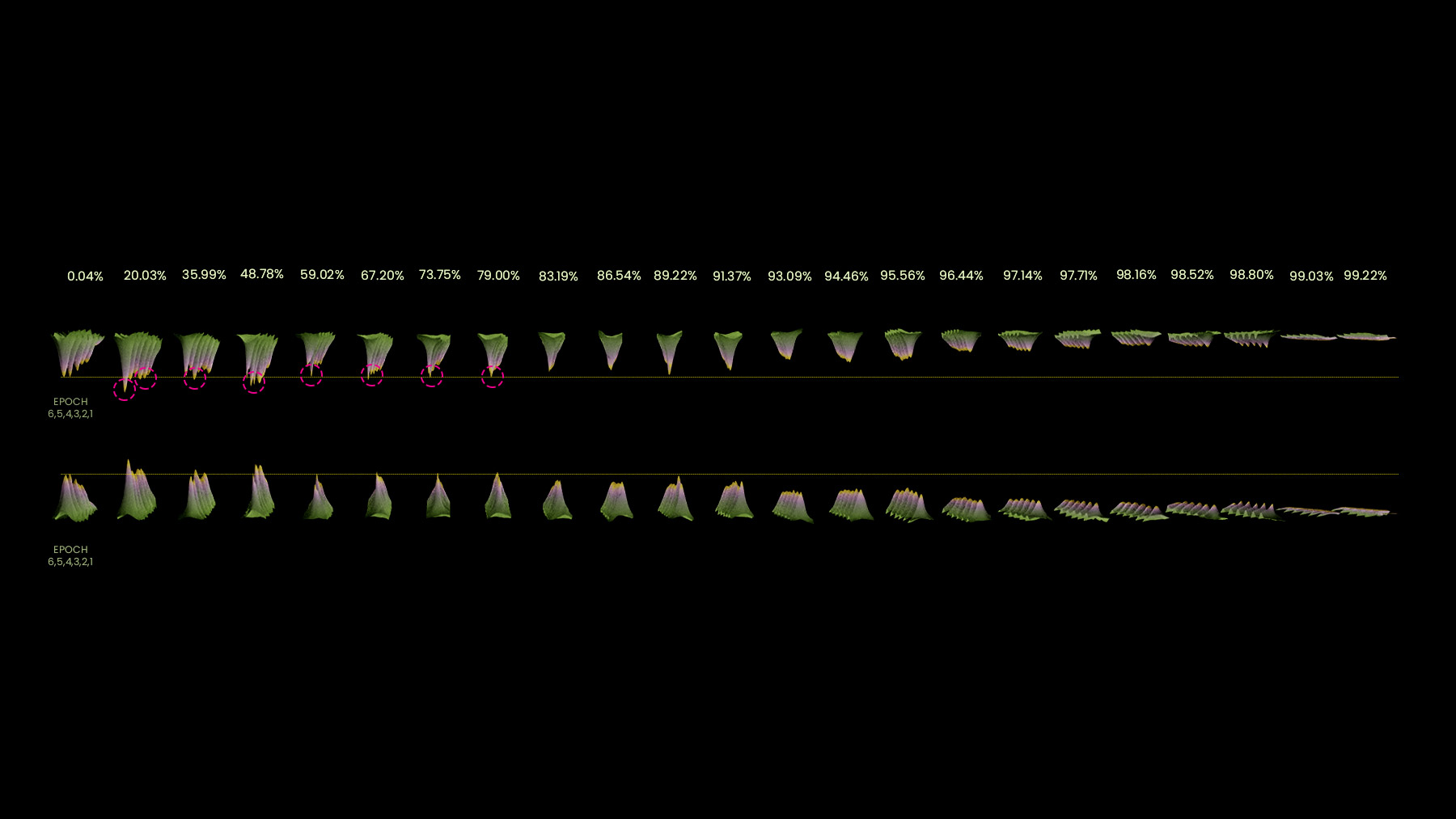

LOTTERY visualizes the performance of a Resnet18 (MNIST dataset) as the weights of the network are gradually being pruned (based on arxiv:1803.03635 by Jonathan Frankle, Michael Carbin). See extended description below the related video on this same page. More variations of this visualization as well as images and videos of other visualizations are available at the Moving Lands and Still Lands galleries.

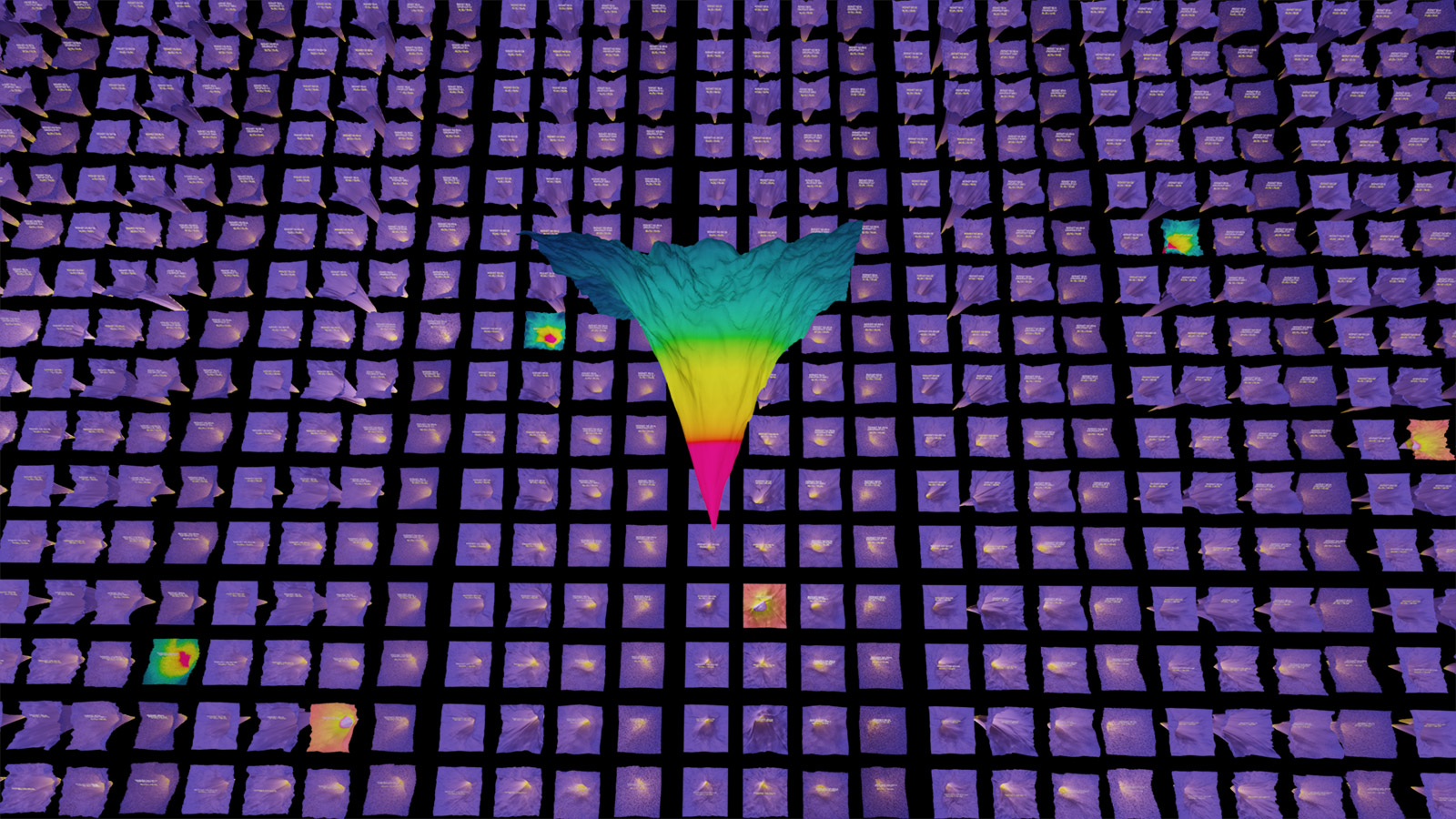

LL Library visualizes a concept prototype for a library of loss landscapes. The loss landscapes featured are created with real data, using Resnet 20 architectures, with batch sizes of 16 and 128 and the Adam optimizer. This is part of an ongoing project. More variations of this visualization as well as images and videos of other visualizations are available at the Moving Lands and Still Lands galleries.

DRONE. This project is ongoing and new updates will be posted later on. Real Data visualization of a geometric convnet | Created together with Neural Concept SA | Some of the parameters of the project: L2 Loss, ADAM, BS=1, LR=0.0001 / 24588 Vertices. The video shows the activations of the convolutional layers inside a Geometric CNN extracting features from the surface of a drone, while the network is being trained to predict aerodynamic properties of the aircraft. The numeric field values are what the network is being trained to predict. They represent the pressure exerted by the air on the drone. The colors over the drone are the features that the geometric convnet is extracting in order to predict those air pressure values.

LR COASTER visualizes a learning rate stress test during the training of a convnet. We ride along the minimizer while exploring its nearby surroundings. I use extreme changes in the learning rate to illustrate how the morphology and dynamics of the loss landscape change in response to the changes in the learning rate. The resolution (300K loss values calculated per frame) allows us to explore the change in morphology. More details and related analysis about this and other visualizations will be published in the future.

Mode Connectivity. NeurIPS 2018 ARXIV/1802.10026. Optima of complex loss functions connected by simple curves over which training and test accuracy are nearly constant. Visualization data generated through a collaboration between Pavel Izmailov (@Pavel_Izmailov), Timur Garipov (@tim_garipov) and Javier Ideami (@ideami). Based on the paper by Timur Garipov, Pavel Izmailov, Dmitrii Podoprikhin, Dmitry Vetrov, Andrew Gordon Wilson: https://arxiv.org/abs/1802.10026 | Creative visualization produced by Javier Ideami. This is part of an ongoing collaboration with Pavel and Timur, more results coming soon.

ON A JOURNEY

Just as a photograph converts the 3 dimensions of everyday life into a 2-dimensional surface and interprets that 3D “reality” from a certain angle and perspective and through certain filters, loss landscape visualizations transform the multidimensional weight space of optimization processes into a much lower dimensional representation which we also process in different ways and study from a variety of angles and perspectives.

In both cases, even though we are simplifying the underlying “reality”, we are producing representations which provide useful information and may trigger new insights.

Through a combination of different tools and strategies, the loss landscape project samples hundreds of thousands of loss values across weight space and builds moving visualizations that capture some of the mysteries of the training processes of deep neural networks. At the intersection of technology, A.I and art, the LL project makes use of the cutting edge fast.ai library and the latest 3D projection, animation and video production technology to produce pieces that take us on a journey into the unknown.
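One common way to obtain such a low-dimensional view is to evaluate the loss on a 2D plane through weight space, spanned by two random directions around a chosen set of weights. The sketch below shows that general idea only; it is not a description of the LL project's actual pipeline. The tiny linear model, the synthetic data and the names `loss`, `random_direction` and `slice_grid` are all assumptions made for this example.

```python
import random

rng = random.Random(0)

# Synthetic regression data generated from y = 2*x0 - x1 + 0.5 (hypothetical).
data = []
for _ in range(50):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    data.append((x, 2.0 * x[0] - 1.0 * x[1] + 0.5))


def loss(theta):
    """Mean squared error of a linear model with weights theta = [w0, w1, b]."""
    total = 0.0
    for (x0, x1), y in data:
        pred = theta[0] * x0 + theta[1] * x1 + theta[2]
        total += (pred - y) ** 2
    return total / len(data)


def random_direction(dim):
    """Random unit vector in weight space."""
    d = [rng.gauss(0, 1) for _ in range(dim)]
    norm = sum(v * v for v in d) ** 0.5
    return [v / norm for v in d]


def slice_grid(center, d1, d2, radius=1.0, n=21):
    """Sample loss(center + a*d1 + b*d2) on an n x n grid of (a, b) values."""
    coords = [radius * (2 * i / (n - 1) - 1) for i in range(n)]
    grid = [[loss([c + a * u + b * v for c, u, v in zip(center, d1, d2)])
             for a in coords] for b in coords]
    return coords, grid


theta_star = [2.0, -1.0, 0.5]  # "trained" weights, known here by construction
delta, eta = random_direction(3), random_direction(3)
coords, grid = slice_grid(theta_star, delta, eta)
print(f"{len(grid) * len(grid[0])} loss values sampled; center = {grid[10][10]:.3g}")
```

Each entry of `grid` is one height of the surface that would be rendered. A 21 x 21 grid gives 441 values, so reaching hundreds of thousands of samples is a matter of a finer grid and a real network in place of the toy model.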

Crafting the mission

The LL project crafting strategies are based on cutting edge artificial intelligence research combined with creative intuition. The mission is to explore the morphology and dynamics of these elusive creatures and inspire the community with visual pieces that make use of real data produced by deep learning training processes.

Every LL piece is carefully crafted with a combination of the finest tools and resources, from fast.ai to cutting edge 3D and movie production software.

Phase 1 is now completed and Phase 2 is currently being prepared.

Going deep